Over the past year, AI went from a promising concept to something woven into everyday work. It’s also appearing in how bad actors plan and execute attacks.

In our December Monthly Intelligence Report, we review how Anthropic uncovered and disrupted the GTG-1002 espionage campaign, where attackers used AI to automate a large share of the intrusion process. I mention this because the story reflects a broader trend: AI is spreading faster than almost anyone anticipated, and 2026 is sure to bring higher expectations from regulators, insurers, boards, and customers.

So the question we need to answer now is, how do we govern AI in a way that protects the business, supports innovation, and reinforces the trust our customers place in us?

What follows is the starter kit I wish I’d had a year ago. It’s a practical CFO lens on a fast-moving landscape – and I hope it helps other finance leaders anchor the AI conversations they’re having with their own stakeholders.

What “AI Governance” Means for CFOs

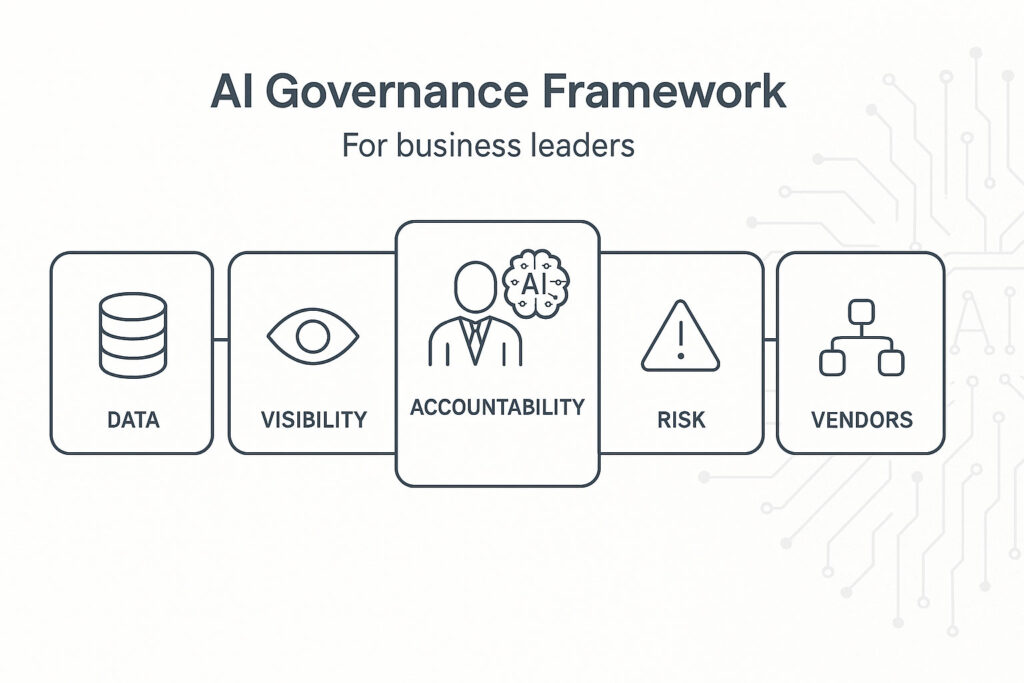

When I think about AI governance, I focus on four things:

- Know where AI is being used.

- Know what data it touches.

- Know who is accountable for decisions and outcomes.

- Know how you’ll demonstrate that governance to auditors, regulators, and your board.

I truly believe governance works best when leaders treat it like a decision-making framework. And because CFOs already own core responsibility for risk, compliance, investment, and business continuity, it falls on us to step up and help guide how AI fits into our org’s risk appetite and long-term plans.

Why This Matters in 2026

Right now, I see three pressures converging:

1. AI adoption is expanding everywhere inside the business.

Employees use Copilot. SaaS tools ship new AI features with little fanfare. Vendors incorporate AI into their workflows. These changes are happening quickly, organically, and usually before policy catches up.

2. Regulatory and audit expectations are sharpening.

SEC guidance, healthcare oversight, and emerging state-level AI requirements all point to the same thing: Organizations must be prepared to demonstrate how they’re governing AI.

3. Attackers are experimenting with automation.

Campaigns like GTG-1002 show how AI can accelerate attacker workflows, including reconnaissance, exploitation, and data analysis. They also raise the level of scrutiny placed on victims after an incident.

This tells me 2026 is clearly the year for CFOs to elevate AI governance from “we should talk about this” to “we have a framework.”

The AI Governance Starter Kit

Five Decisions CFOs Can Take the Lead On

This is the core of the work. The following decisions will help shape how you adopt, monitor, and evaluate AI. Nothing in this starter kit requires you to have technical expertise, only follow-through.

1. Decide what AI can and cannot touch

Start with your data. Identify which categories can be used with AI tools, which require restricted permissions, and which need strict boundaries. Teams will be able to move faster with these expectations spelled out.

This is also the right time to define acceptable error thresholds in processes where AI plays a supporting role. Financial workflows, operational decisions, and customer-facing interactions all have different levels of tolerance. Establish clear guidelines on these to prevent downstream gaps.
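For teams that want to turn these rules into something checkable, here is a minimal Python sketch of a data policy and a set of error thresholds. The category names, process names, and numbers are all hypothetical placeholders, not recommendations; your own classification scheme and tolerances should replace them.

```python
from enum import Enum


class AIAccess(Enum):
    ALLOWED = "allowed"        # may be used with approved AI tools
    RESTRICTED = "restricted"  # needs explicit permission first
    PROHIBITED = "prohibited"  # must never be sent to an AI tool


# Hypothetical data categories and rules; replace with your own classification.
DATA_POLICY = {
    "public_marketing": AIAccess.ALLOWED,
    "internal_financials": AIAccess.RESTRICTED,
    "customer_pii": AIAccess.PROHIBITED,
}

# Hypothetical acceptable error rates per process (fraction of AI outputs).
ERROR_TOLERANCE = {
    "financial_reporting": 0.0,
    "operations": 0.02,
    "customer_support_drafts": 0.05,
}


def ai_access_for(category: str) -> AIAccess:
    """Return the rule for a data category; unknown categories are prohibited."""
    return DATA_POLICY.get(category, AIAccess.PROHIBITED)


def within_tolerance(process: str, observed_error_rate: float) -> bool:
    """True if the observed error rate is at or below the documented threshold."""
    # Processes without a documented threshold get zero tolerance by default.
    return observed_error_rate <= ERROR_TOLERANCE.get(process, 0.0)
```

Defaulting unknown categories to prohibited and unknown processes to zero tolerance is a design choice in this sketch: anything nobody has classified yet stays out of AI tools until someone makes a decision about it.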

2. Decide how you’ll get visibility into AI usage

Every organization needs a working inventory of its AI tools, whether they are built internally, embedded in SaaS platforms, or introduced through individual workflows. Alongside this inventory, you need a plan for logging how AI interacts with sensitive data and business processes. Both pieces will help you support audits, reviews, and procurement decisions.

Any dependable inventory system will work. It just needs to be complete enough to answer basic questions when they arrive.
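As an illustration of what "complete enough" can look like, here is a minimal Python sketch of an inventory record and two of the basic questions it should answer. The field names, tool names, and data categories are hypothetical; a spreadsheet with the same columns would serve the same purpose.

```python
from dataclasses import dataclass, field


@dataclass
class AITool:
    name: str
    source: str   # "internal", "saas_embedded", or "individual"
    owner: str    # accountable person or team
    data_categories: list[str] = field(default_factory=list)
    logging_enabled: bool = False


def tools_touching(inventory, category):
    """Names of tools that process a given data category."""
    return [t.name for t in inventory if category in t.data_categories]


def logging_gaps(inventory, sensitive_categories):
    """Names of tools that touch sensitive data but have no logging in place."""
    return [
        t.name
        for t in inventory
        if not t.logging_enabled
        and any(c in sensitive_categories for c in t.data_categories)
    ]


# Hypothetical example entries, one per source type.
inventory = [
    AITool("Coding assistant", "individual", "Engineering", ["source_code"]),
    AITool("CRM AI summaries", "saas_embedded", "Sales Ops", ["customer_pii"]),
    AITool("Forecasting model", "internal", "Finance",
           ["internal_financials"], logging_enabled=True),
]

print(tools_touching(inventory, "customer_pii"))
print(logging_gaps(inventory, {"customer_pii", "internal_financials"}))
```

The two helper functions mirror the questions an auditor or insurer is likely to ask first: which tools see a given kind of data, and where sensitive data flows through AI without a log.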

3. Decide who owns AI governance

This is where many organizations stall. AI governance can’t live with IT alone, and it can’t live with finance alone. It’s cross-functional by nature.

Involving a small team of leaders helps ensure the right balance of oversight and efficiency. Depending on your internal structure, you could include:

- CFO – for risk appetite, compliance alignment, investment decisions

- CIO/CTO – for architecture and implementation

- CISO – for security, data protection, and third-party exposure

- Legal/Privacy – for regulatory obligations

Shared ownership increases your odds of better decision-making and fewer blind spots.

4. Decide how you’ll handle AI-related incidents

When AI plays a part in a workflow – regardless of whether it’s internal or vendor-driven – it becomes part of the incident record. Update your incident response plans to include questions that can uncover what role AI played in an event.

Examples include:

- Which AI tools were involved?

- What data did they process?

- Are prompts, outputs, and related logs available?

- Did AI-generated actions influence or accelerate the issue?

Answers to these questions will help investigations move faster and strengthen your position during regulatory and insurance reviews.

5. Decide what you expect from vendors around AI

Vendor environments represent a significant portion of your organization’s risk surface, especially as more of them build AI into their offerings.

At a minimum, CFOs should make sure procurement and vendor management teams are asking strategic vendors to explain:

- Where do you use AI in delivering services to us?

- What data of ours is exposed to your AI models?

- Do you train or fine-tune models on customer data?

- How do you secure and audit your AI usage?

A separate article in this series, [Vendor Risk in the Age of AI], explores this topic in depth with insights from our CISO.

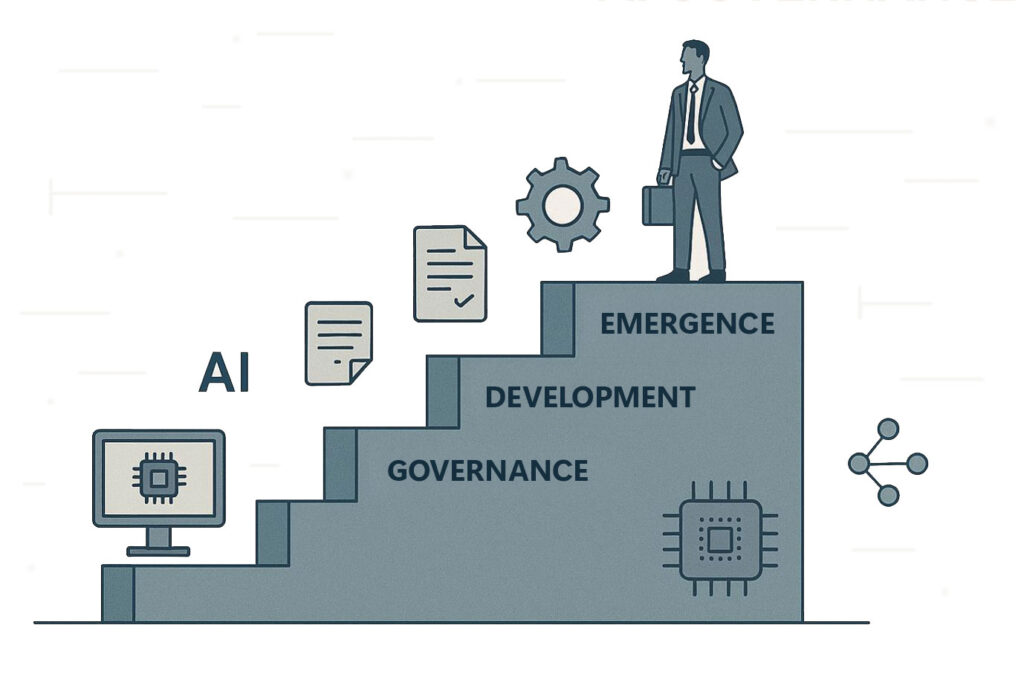

A Quick Maturity Check

Here’s a simple way to self-assess your organization’s current AI governance posture in 15 seconds. What’s your status?

Emerging

- No policy guiding employee AI use

- No inventory of AI tools or data access

- No reviews or documentation of vendor AI usage

Developing

- Policy exists, but incomplete

- Partial AI tool inventory and logging

- Vendors are asked for AI-related details during renewals

Governed

- Clear expectations for AI access and usage

- Full inventory + logging across key systems

- AI-aware incident response

- Vendor AI transparency built into procurement

- Board sees regular updates

Wherever you are, steady improvement matters more than immediate completeness.
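If you want to track this status over time, the three tiers above can be expressed as a small Python sketch. The practice names and the scoring rule are my own assumptions, chosen to mirror the lists: all five practices fully in place means Governed, no policy, inventory, or vendor review means Emerging, and anything in between is Developing.

```python
# Hypothetical practice names, one per item in the "Governed" list above.
PRACTICES = (
    "policy",
    "inventory_and_logging",
    "incident_response",
    "vendor_transparency",
    "board_updates",
)

# The three practices whose absence defines the "Emerging" tier.
CORE_PRACTICES = ("policy", "inventory_and_logging", "vendor_transparency")


def maturity_status(answers: dict) -> str:
    """Map answers of "none", "partial", or "full" to a maturity tier."""
    # Any practice left unanswered is treated as "none".
    levels = {p: answers.get(p, "none") for p in PRACTICES}
    if all(level == "full" for level in levels.values()):
        return "Governed"
    if all(levels[p] == "none" for p in CORE_PRACTICES):
        return "Emerging"
    return "Developing"


# Example: policy and inventory exist but are incomplete.
print(maturity_status({"policy": "partial", "inventory_and_logging": "partial"}))
```

Rerunning the same check each quarter gives the board a consistent, comparable status rather than a fresh narrative every time.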

Where CFOs Often Get Stuck

A few patterns I see repeatedly:

- Waiting for regulations to settle

- Treating AI governance as an IT-owned initiative

- Overlooking AI embedded in SaaS and vendor contracts

- Not defining “acceptable AI error” early

- Assuming visibility exists when it doesn’t

Naming these challenges helps teams move through them and keep the progress going.

Download: The CFO’s AI Governance Checklist for 2026

We’ve assembled a full checklist – including decision points, vendor questions, and policy components – into a short, printable resource for leadership teams.

Download it here: AI Governance Checklist for CFOs: A Practical Starter Guide

Closing Thought

CFOs don’t need deep expertise in AI models or algorithms. What we do need is a dependable governance framework that keeps AI initiatives aligned with our obligations and long-term goals.

The conversations we start now set the tone for how AI will serve our organizations in 2026 and beyond.